I am a Member of Technical Staff at Microsoft AI in Zürich, where I work on multimodal large language models. Before joining Microsoft AI, I was a research scientist at Google Brain and later Google DeepMind. Earlier, I received my PhD from Harvard University, where I worked with Christopher Harvey. My thesis focused on how visual and action-related information is represented in the mammalian cerebral cortex. Before that, I studied neuroscience at ETH Zürich and biochemistry at the University of Cambridge.

I work on vision-language models and multimodal large language models, with a focus on spatial understanding and localization. Alongside my research, I have collaborated with product teams across Alphabet to integrate state-of-the-art deep learning models into applications spanning a wide range of industries.

For a full list of publications, see Google Scholar.

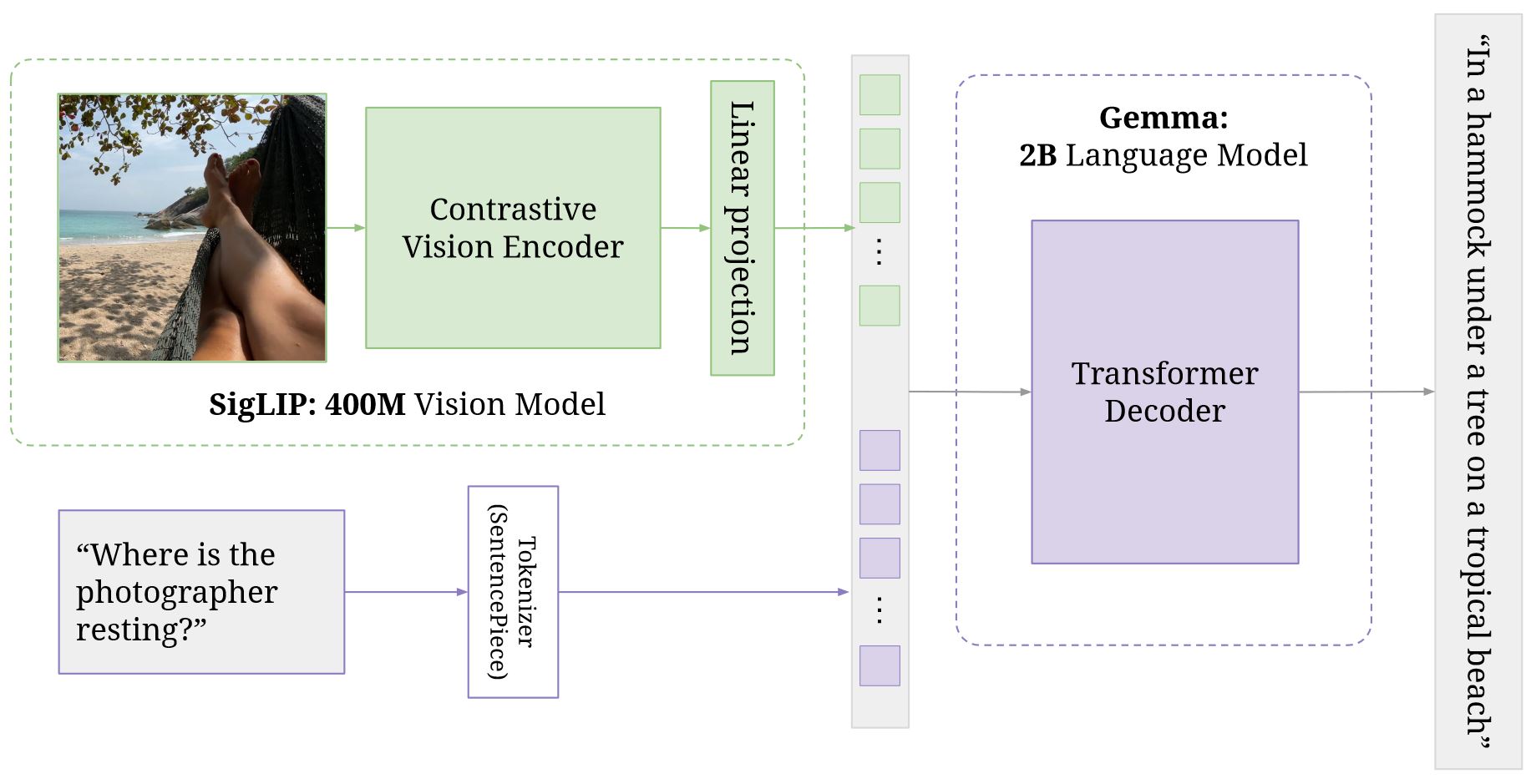

Lucas Beyer, Andreas Steiner, André Susano Pinto, Alexander Kolesnikov, Xiao Wang, et al. Preprint, 2024. PaliGemma is an open vision-language model (VLM) based on the SigLIP-So400m vision encoder and the Gemma-2B language model. I contributed to the model's spatial understanding capabilities.

Tim Salzmann, Markus Ryll, Alex Bewley, Matthias Minderer. ECCV, 2024. We propose a simple end-to-end architecture for detecting objects and their visual relationships.

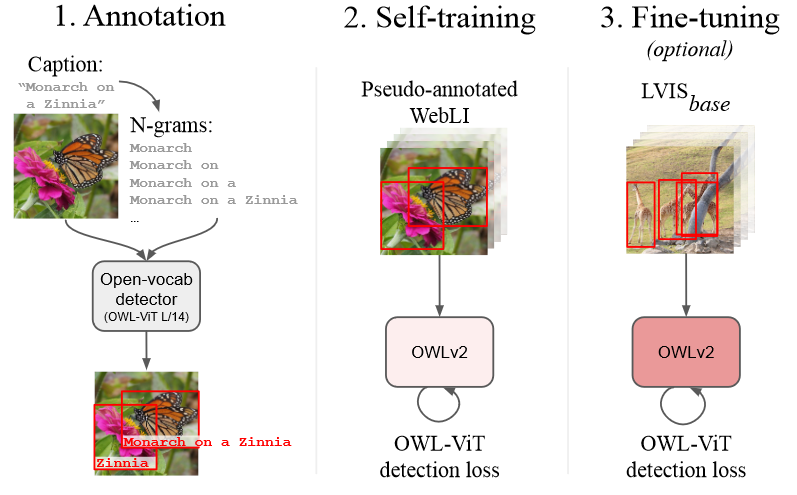

Matthias Minderer, Alexey Gritsenko, Neil Houlsby. NeurIPS, 2023. We scale open-vocabulary object detection through self-training on pseudo-labeled web image-text data.

Matthias Minderer, Alexey Gritsenko, Austin Stone, Maxim Neumann, Dirk Weissenborn, Alexey Dosovitskiy, Aravindh Mahendran, Anurag Arnab, Mostafa Dehghani, Zhuoran Shen, Xiao Wang, Xiaohua Zhai, Thomas Kipf, Neil Houlsby. ECCV, 2022. We develop a simple transformer-based architecture and training recipe that achieves strong performance in open-vocabulary object detection.

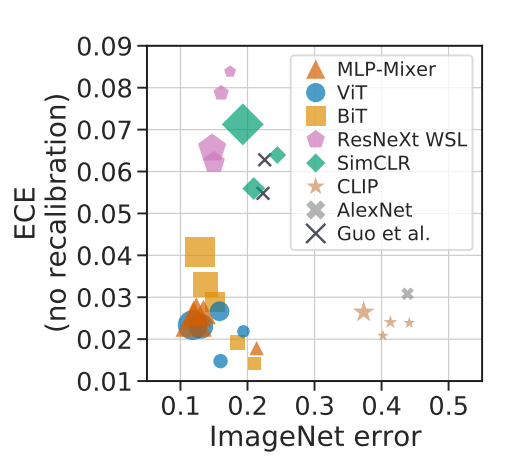

Matthias Minderer, Josip Djolonga, Rob Romijnders, Frances Hubis, Xiaohua Zhai, Neil Houlsby, Dustin Tran, Mario Lucic. NeurIPS, 2021. We study the uncertainty calibration of recent state-of-the-art image classification models and its relationship with accuracy.

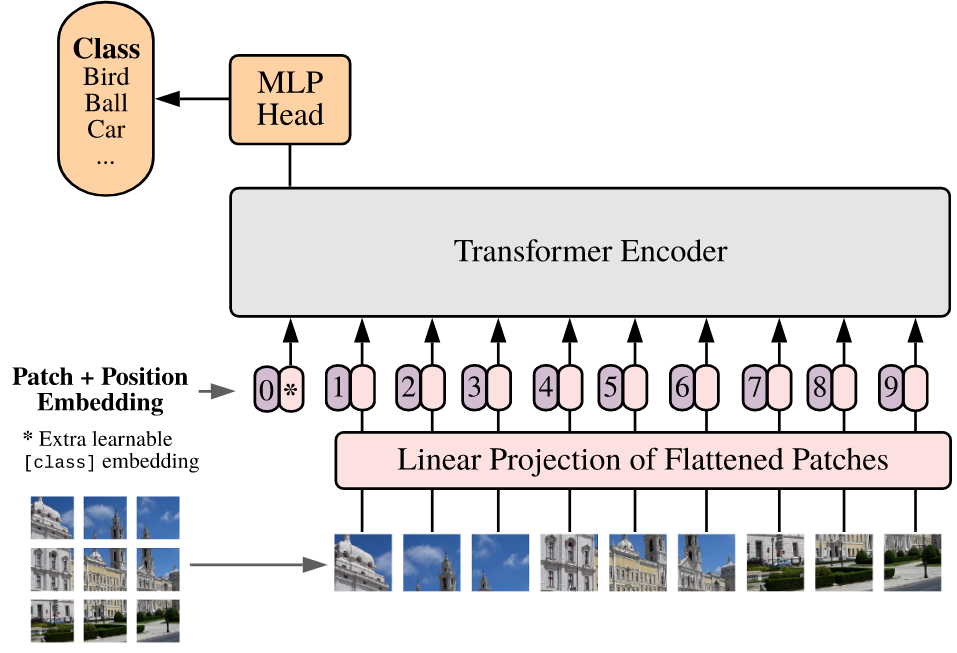

Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, Neil Houlsby. ICLR, 2021. We show that a pure transformer, applied directly to sequences of image patches, can perform very well on image classification tasks.

Matthias Minderer, Olivier Bachem, Neil Houlsby, Michael Tschannen. ICML, 2020. We tackle the problem of low-level shortcuts in self-supervised learning by training an adversarial "lens" to remove shortcut features from images.

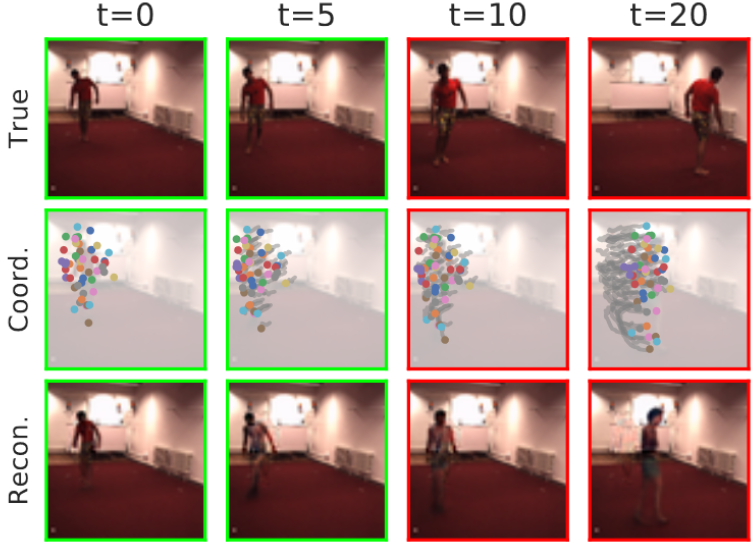

Matthias Minderer, Chen Sun, Ruben Villegas, Kevin Murphy, Honglak Lee. NeurIPS, 2019. By using a spatially structured (keypoint-based) image representation, we improve video prediction quality and the usefulness of the learned video representations.

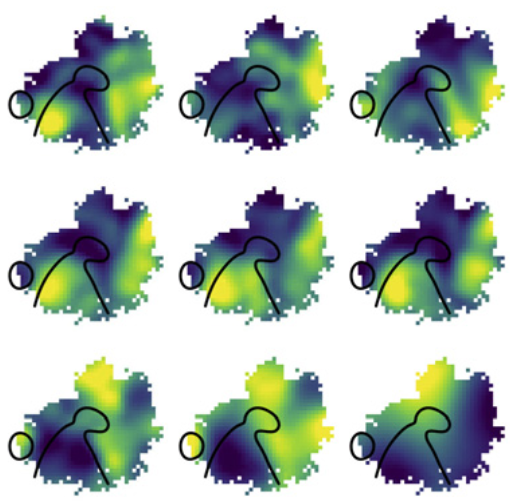

Matthias Minderer, Kristen Brown, Christopher Harvey. Neuron, 2019. Using large-scale neural recordings and deep models of neural encoding, we show that navigation-related information is distributed and varies gradually across large parts of the posterior cortex, even across retinotopic boundaries.

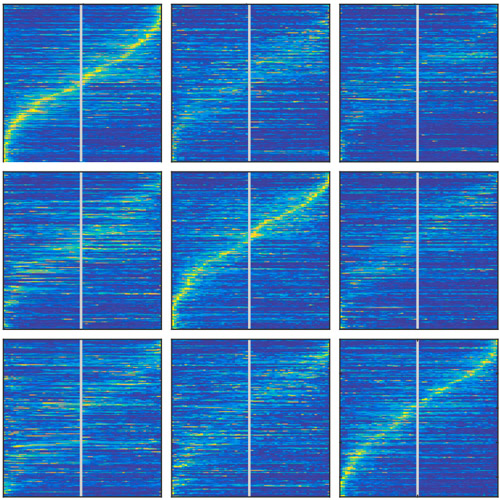

Laura Driscoll, Noah Pettit, Matthias Minderer, Selmaan Chettih, Christopher Harvey. Cell, 2017. Contrary to the idea that neuronal representations of sensory stimuli and motor actions are stable, representations in the parietal cortex can change across days. This may allow a tradeoff between the stable encoding of information and the flexibility to incorporate new information.

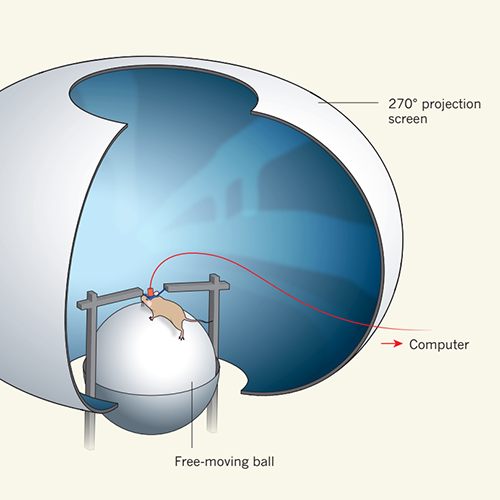

Matthias Minderer, Christopher Harvey, Flavio Donato, Edvard Moser. Nature, 2016. We discuss the advantages of using virtual reality to study sensorimotor representations in the brain.

Matthias Minderer, Wenrui Liu, Lazar Sumanovski, Sebastian Kügler, Fritjof Helmchen, David Margolis. J Physiol, 2012. We present a method for fast fluorescence imaging of map-level cortical activity using a calcium indicator protein. Sensory-evoked neuronal activity can be imaged repeatedly in the same mouse over weeks, enabling new opportunities for the longitudinal study of cortical function and dysfunction.

Website design by Jon Barron.